Big Data Hadoop Certification Training Course in Bangalore

Get Hadoop certified in 6 weeks. The Big Data Hadoop course is delivered by a working professional with excellent practical experience.

2000+ Ratings

3000+ Happy Learners

Course Reviews

Firstly, I thank Apponix for providing such good training and knowledge to everyone here. Secondly, I thank my trainer for sharing his knowledge with us. He did his best to explain all the topics clearly. I'm very thankful to have trained under him. I recommend Apponix to others looking for the best training.

The class is really well organized, and the tutor has very good knowledge of the subject and explains it in depth with good examples. Thank you for helping me overcome my fear of programming and learn Big Data Hadoop from the very basics.

Apponix is the best training center. It is one of the best training providers and taught us every concept of Big Data Hadoop.

My experience with Apponix Bangalore has been good. I completed my Hadoop with Spark Scala training a month ago. These courses have helped me gain the competitive edge required on the job. Thank you so much to all Apponix Bangalore members for their support.

I recently completed Hadoop training at Apponix. I took the course on Big Data and Hadoop, and it was really helpful in understanding the overall ecosystem. A particularly good thing is that the faculty is a certified expert with industry experience. The Hadoop trainer's way of teaching and the entire program structure are very good.

I have taken Big Data Hadoop and Spark course at Apponix. I found the trainer very knowledgeable, and all the courses that I enrolled in were delivered in a very detailed and professional manner. For any person looking for Hadoop training, you can join Apponix without hesitation.

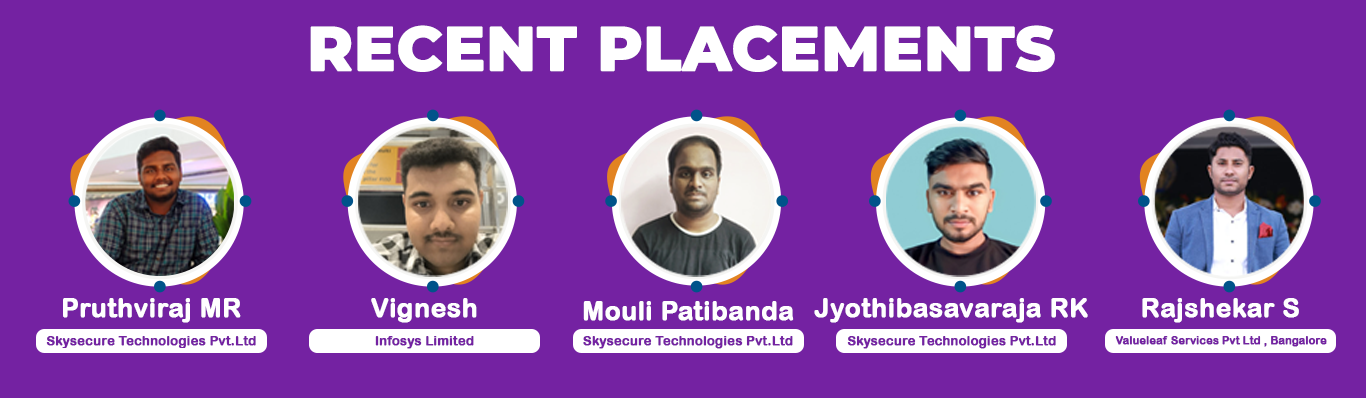

Fees & Training Options in Bangalore

Classroom Training

- Interactive Classroom Training Sessions

- 60+ Hrs Practical Learning

- Guaranteed 5 Interview Arrangements

- Excellent Classroom Infrastructure

- Assured Job Placement

- Delivered by Working Professionals

Online Training

- Flexible class schedules

- Access to recorded sessions of live classes for reference.

- Limited seats to maximize interactions and minimize distractions.

- Online classes will be taken by industry veterans associated with MNCs.

Big Data Hadoop Training Syllabus

Pre-requisites

This course is meant for –

- Junior IT professionals

- Software Developers

- Software Architects

- Senior IT professionals

- Software testers

- Mainframe professionals

- Business Intelligence professionals

- IT Project Managers

- Data Scientists

- Data Management experts

- Data Analytics professionals and

- IT Graduates.

Prerequisites for this course are as follows –

- The applicant must have a grasp of Core Java and SQL.

Course Syllabus

- Introduction to Data and System

- Types of Data

- Traditional ways of dealing with large data and their problems

- Types of Systems & Scaling

- What is Big Data

- Challenges in Big Data

- Challenges in Traditional Application

- New Requirements

- What is Hadoop? Why Hadoop?

- Brief history of Hadoop

- Features of Hadoop

- Hadoop and RDBMS

- Overview of the Hadoop ecosystem

- Installation in detail

- Creating Ubuntu image in VMware

- Downloading Hadoop

- Installing SSH

- Configuring Hadoop, HDFS & MapReduce

- Downloading, installing & configuring Hive

- Downloading, installing & configuring Pig

- Downloading, installing & configuring Sqoop

- Configuring Hadoop in Different Modes

- File System - Concepts

- Blocks

- Replication Factor

- Version File

- Safe mode

- Namespace IDs

- Purpose of NameNode

- Purpose of DataNode

- Purpose of Secondary NameNode

- Purpose of JobTracker

- Purpose of TaskTracker

- HDFS Shell Commands – copy, delete, create directories etc.

- Reading and Writing in HDFS

- Differences between Unix commands and HDFS commands

- Hadoop Admin Commands

- Hands on exercise with Unix and HDFS commands

- Read / Write in HDFS – Internal Process between Client, NameNode & DataNodes

- Accessing HDFS using Java API

- Various Ways of Accessing HDFS

- Understanding HDFS Java classes and methods

- Commissioning / Decommissioning DataNodes

- Balancer

- Replication Policy

- Network Distance / Topology Script

- About MapReduce

- Understanding block and input splits

- MapReduce Data types

- Understanding Writable

- Data Flow in MapReduce Application

- Understanding MapReduce problem on datasets

- MapReduce and Functional Programming

- Writing MapReduce Application

- Understanding Mapper function

- Understanding Reducer Function

- Understanding Driver

- Usage of Combiner

- Usage of Distributed Cache

- Passing the parameters to mapper and reducer

- Analysing the Results

- Log files

- Input Formats and Output Formats

- Counters, Skipping Bad and unwanted Records

- Writing joins in MapReduce with two input files; join types

- Executing a MapReduce job: insights

- Exercises on MapReduce

- Hive concepts

- Hive architecture

- Install and configure Hive on a cluster

- Different types of tables in Hive

- Hive library functions

- Buckets

- Partitions

- Joins in Hive

- Inner joins

- Outer Joins

- Hive UDF

- Hive Query Language

- Pig basics

- Install and configure Pig on a cluster

- Pig library functions

- Pig vs Hive

- Write sample Pig Latin scripts

- Modes of running Pig

- Running in Grunt shell

- Running as a Java program

- Pig UDFs

- Install and configure Sqoop on a cluster

- Connecting to RDBMS

- Installing MySQL

- Import data from MySQL to Hive

- Export data to MySQL

- Internal mechanism of import/export

- HBase concepts

- HBase architecture

- Region server architecture

- File storage architecture

- HBase basics

- Column access

- Scans

- HBase use cases

- Install and configure HBase on a multi node cluster

- Create a database; develop and run sample applications

- Access data stored in HBase using Java API

- MapReduce client to access the HBase data

- Resource Manager (RM)

- Node Manager (NM)

- Application Master (AM)

+91 80505-80888

Overview of Big Data Hadoop Course in Bangalore

This course in Bangalore will make you a master of Hadoop framework concepts. It will also teach you about the tools and methodologies used in Big Data.

With the help of this course, you will be able to advance your IT career as a Big Data Developer.

The curriculum also covers Big Data essentials such as Spark applications, functional programming and parallel processing.

Benefits of learning Big Data Hadoop

When you choose to learn Big Data Hadoop, you are future-proofing your IT career.

As per market insights, the Big Data market has already surpassed the USD 7.35 billion mark, and this figure is expected to multiply several times over in the coming years.

With SMEs and established brands widely adopting Big Data and Hadoop, taking this course is a worthwhile investment in your career.

Related job roles

- Hadoop Developer

- Hadoop Admin

- Hadoop Architect

- Big Data Analyst

- Data Scientist

Big Data Hadoop Course

Is this course enough to pass the certification exam?

Yes, the curriculum of this course is enough to prepare you to take and pass the CCA175 Hadoop certification exam.

What are the applications of Big Data and Hadoop?

Hadoop is an open-source platform for storing and processing large volumes of data. It runs on clusters of commodity hardware and can run numerous tasks in parallel, which is why businesses and brands prefer it for large-scale data processing.
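To make the storage side concrete, here is a minimal Python sketch of how HDFS, Hadoop's file system, would lay out one file. It assumes the stock HDFS defaults of a 128 MB block size and a replication factor of 3; both are configurable per cluster, and the function name `hdfs_footprint` is made up for this illustration.

```python
import math

# Assumed values: HDFS defaults in Hadoop 2.x and later (configurable per cluster).
BLOCK_SIZE_MB = 128
REPLICATION_FACTOR = 3


def hdfs_footprint(file_size_mb, block_size_mb=BLOCK_SIZE_MB,
                   replication=REPLICATION_FACTOR):
    """Return (blocks, block replicas, raw storage in MB) for one file.

    Hypothetical helper for illustration; it is not part of any Hadoop API.
    """
    # The file is cut into fixed-size blocks; the last block may be partial.
    blocks = math.ceil(file_size_mb / block_size_mb)
    # Every block is copied to `replication` different DataNodes.
    replicas = blocks * replication
    # A partial last block only occupies its actual size on disk.
    raw_storage_mb = file_size_mb * replication
    return blocks, replicas, raw_storage_mb


blocks, replicas, raw_mb = hdfs_footprint(1000)
print(f"{blocks} blocks, {replicas} replicas, {raw_mb} MB of raw storage")
```

Under these assumptions a 1000 MB file becomes 8 blocks stored as 24 replicas, which is what lets many nodes work on different parts of the same file at once.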

Why Should You Learn Big Data?

- A highly in-demand career with promising opportunities

- Big Data finds new applications every day. Its use in fields such as AI, robotics, web development and DevOps has considerably increased the credibility of, and demand for, Big Data Hadoop professionals

- Hadoop is a widely preferred, flexible, open-source framework

What does Big Data mean?

Big Data refers to large volumes of structured, unstructured, and semi-structured data, and to the process of analyzing them. The incoming data could be in the form of videos, texts, and images generated by individuals using the internet every day. The purpose of Big Data is to provide brands and businesses with actionable insights so that they can make better business-critical decisions.

What is Hadoop?

Hadoop is an open-source framework for distributed data storage and processing. It is preferred by enterprises that rely on Big Data.
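The processing side follows the MapReduce model covered in the syllabus. The sketch below imitates its three phases (map, shuffle, reduce) in plain Python on a word-count problem. It is only an illustration of the idea: it runs in a single process and does not use the Hadoop API, and all function names are assumptions made for this example.

```python
from collections import defaultdict


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Shuffle: group emitted values by key, as the framework does
    between the map and reduce phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reducer: sum the counts collected for one word."""
    return key, sum(values)


def word_count(lines):
    # On a real cluster each line (or input split) could be mapped on a
    # different node; here everything runs sequentially in one process.
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count([
    "big data hadoop",
    "hadoop stores big data",
    "hadoop processes data",
])
print(counts)
```

Because each mapper call only sees its own line and each reducer call only sees one key, the work can be spread across many machines, which is the property Hadoop exploits.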